Martin Feick

Doctoral Researcher

German Research Center for Artificial Intelligence

Saarland Informatics Campus

My name is Martin Feick, and I am a fifth-year PhD candidate in the Ubiquitous Media Technology Lab (UMTL) at Saarland University and a researcher in the Cognitive Assistants Department at the German Research Center for Artificial Intelligence (DFKI) in Germany. During my PhD, I was a visiting researcher at CSAIL at the Massachusetts Institute of Technology (MIT), USA.

I completed my Master’s thesis research in Human-Computer Interaction (HCI) at University College London, UCLIC (United Kingdom). During my Master’s studies, I also worked part-time as an HCI researcher in the HCI-lab at Saarland University. Before that, I spent six months in the ILab at the University of Calgary (Canada) writing my Bachelor’s thesis. I hold a Master of Science and a Bachelor of Science in Applied Computer Science from the Saarland University of Applied Sciences.

My Research

“My doctoral research explores how we can manipulate users’ visual perception to create novel, immersive and interactive experiences that seamlessly integrate into reality.”

Portfolio

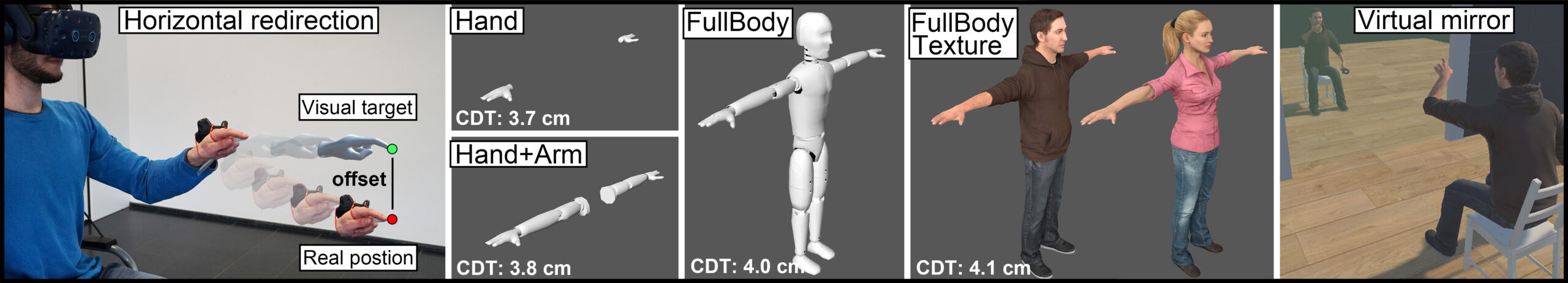

The Impact of Avatar Completeness on Embodiment and the Detectability of Hand Redirection in Virtual Reality

To enhance interactions in VR, many techniques introduce offsets between the virtual and real-world position of users’ hands. Nevertheless, such hand redirection (HR) techniques are only effective as long as they go unnoticed by users—not disrupting the VR experience.

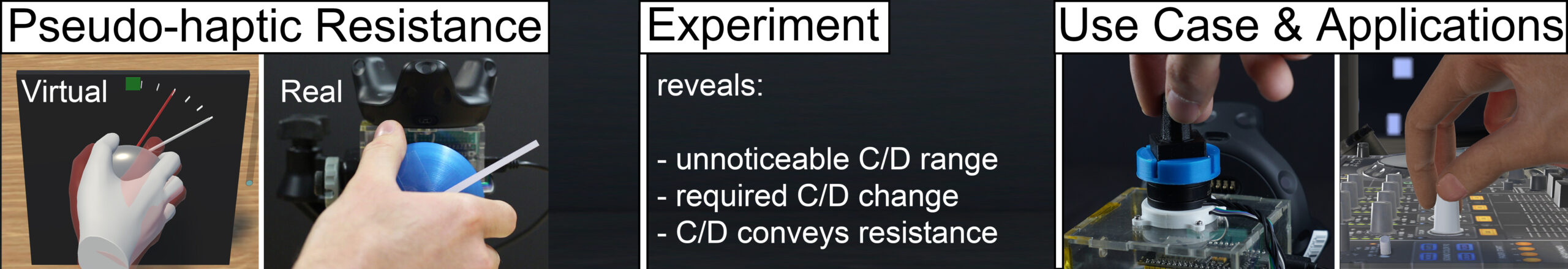

Turn-It-Up: Rendering Resistance for Knobs in Virtual Reality through Undetectable Pseudo-Haptics

In this work, we explore providing haptic feedback for rotational manipulations, e.g., through knobs. We propose the use of a Pseudo-Haptic technique alongside a physical proxy knob to simulate different physical resistances.

Proceedings of the 2023 ACM Symposium on User Interface Software and Technology (UIST-23) Full paper

In a psychophysical experiment with 20 participants, we found that designers can introduce unnoticeable offsets between real and virtual rotations of the knob, and we report the corresponding detection thresholds. Based on these, we present the Pseudo-Haptic Resistance technique to convey physical resistance while applying only unnoticeable pseudo-haptic manipulation. Additionally, we provide a first model of how C/D gains correspond to physical resistance perceived during object rotation, and outline how our results can be translated to other rotational manipulations. Finally, we present two example use cases that demonstrate the versatility and power of our approach.

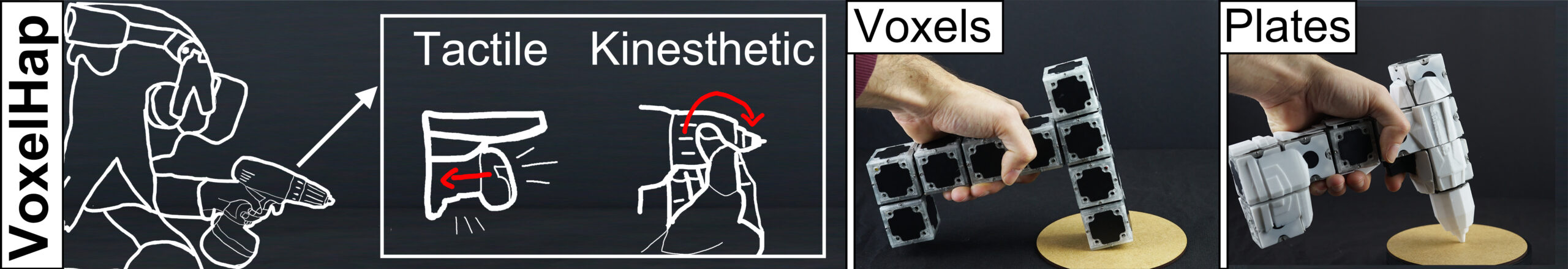

VoxelHap: A Toolkit for Constructing Proxies Providing Tactile and Kinesthetic Haptic Feedback in Virtual Reality

In this work, we present the VoxelHap toolkit which enables users to construct highly functional proxy objects using Voxels and Plates. Voxels are blocks with special functionalities that form the core of each physical proxy. Plates increase a proxy’s haptic resolution, such as its shape, texture or weight.

Proceedings of the 2023 ACM Symposium on User Interface Software and Technology (UIST-23) Full paper

Beyond providing physical capabilities to realize haptic sensations, VoxelHap utilizes VR illusion techniques to expand its haptic resolution. We evaluated the capabilities of the VoxelHap toolkit through the construction of a range of fully functional proxies across a variety of use cases and applications. In two experiments with 24 participants, we investigated a subset of the constructed proxies, studying how they compare to a traditional VR controller. First, we investigated VoxelHap’s combined haptic feedback and, second, the trade-offs of using ShapePlates. Our findings show that VoxelHap’s proxies outperform traditional controllers and were favored by participants.

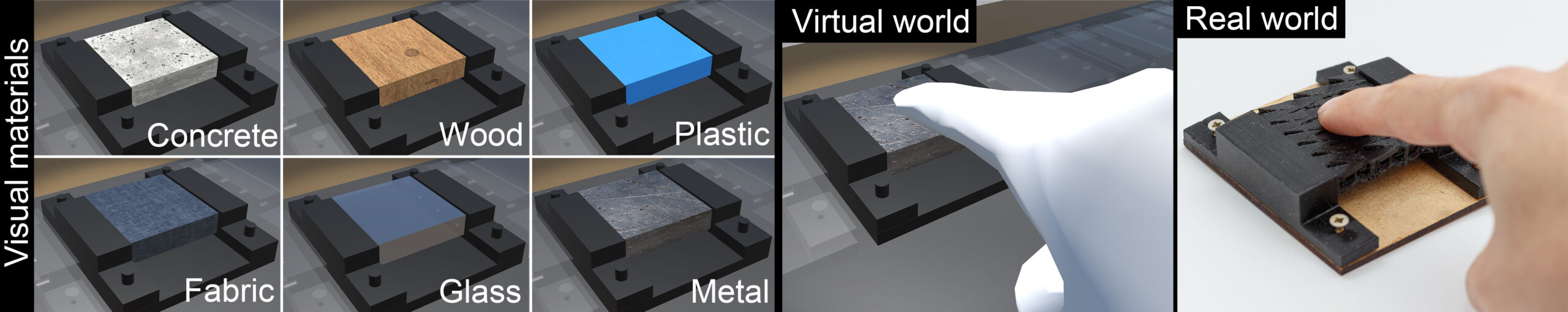

MetaReality: Enhancing Tactile Experiences using Actuated 3D-printed Metamaterials in Virtual Reality

In this work, we designed five different metamaterial patterns based on features that are known to affect tactile properties.

We evaluated whether our samples were able to successfully convey different levels of roughness and hardness sensations at varying levels of compression.

Frontiers in Virtual Reality. Special issue: Beyond Touch. Journal article

While we found that roughness was significantly affected by compression state, hardness did not seem to follow the same pattern.

In a second study, we focused on two metamaterial patterns showing promise for roughness perception and investigated their visuo-haptic perception in Virtual Reality. Here, eight different compression states of our two selected metamaterials were overlaid with six visual material textures. Our results suggest that our metamaterials matched the textures displayed to participants best at low compression states. Additionally, when asked which material they perceived, participants used adjectives such as “broken” and “damaged”. This indicates that metamaterial surface textures may be able to simulate different object states.

Our results underline that metamaterial design can extend the gamut of tactile experiences of 3D-printed surface structures, as a single sample can reconfigure its haptic sensation through compression.

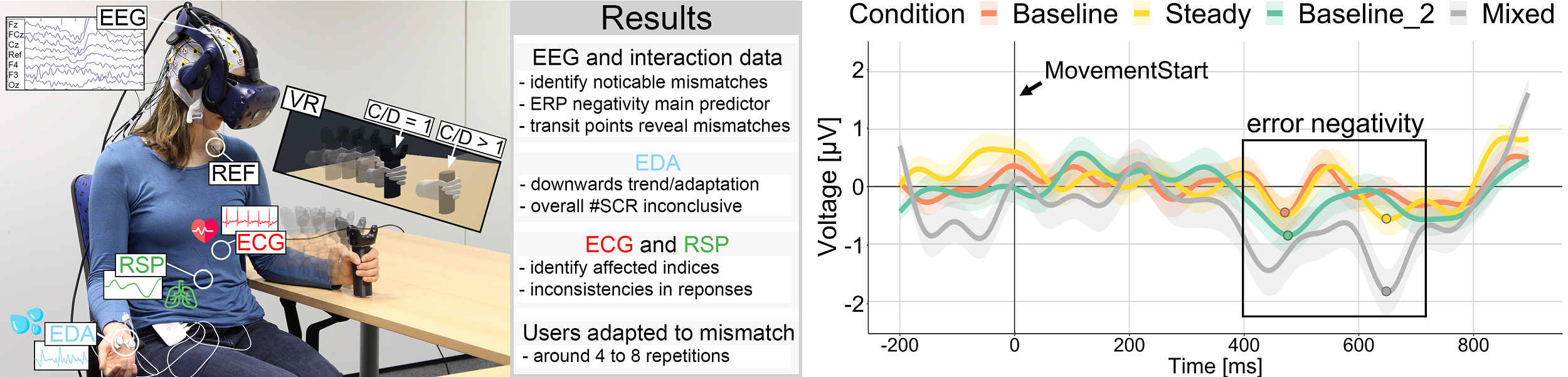

Investigating Noticeable Hand Redirection in Virtual Reality using Physiological and Interaction Data

Hand redirection is effective so long as the introduced offsets are not noticeably disruptive to users. In this work, we investigate the use of physiological and interaction data to detect movement discrepancies between a user’s real and virtual hand, working towards a novel approach for identifying discrepancies that are large enough to be noticed.

Proceedings of the 2023 IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR’23) Full paper

We ran a study with 22 participants, collecting EEG, ECG, EDA, RSP, and interaction data. Our results suggest that EEG and interaction data can be reliably used to detect visuo-motor discrepancies, whereas ECG and RSP seem to suffer from inconsistencies. Our findings also show that participants quickly adapt to large discrepancies, and that they constantly attempt to establish a stable mental model of their environment. Together, these findings suggest that there is no absolute threshold for possible non-detectable discrepancies; instead, it depends primarily on participants’ most recent experience with this kind of interaction.

MoVRI: The Museum of Virtual Reality Illusions

We demonstrate the Museum of Virtual Reality Illusions (MoVRI). In contrast to a physical museum, the MoVRI is not a real-world building but an interactive VR application. Moreover, the museum does not exhibit pieces of art but famous “pieces” of scientific VR research: a collection of VR illusion techniques.

Saarland Informatics Campus (2023) Demo

In recent decades, many different kinds of illusions have been presented in the VR research field; yet how these illusions feel mostly remains abstract for the reader. Therefore, we designed and implemented MoVRI, allowing visitors to experience various illusions displayed in exhibition rooms. Visitors can explore the museum by natural locomotion and enter the exhibition rooms they are most interested in. As a result, MoVRI empowers novices and VR experts to experience first-hand VR illusions that would otherwise remain inaccessible.

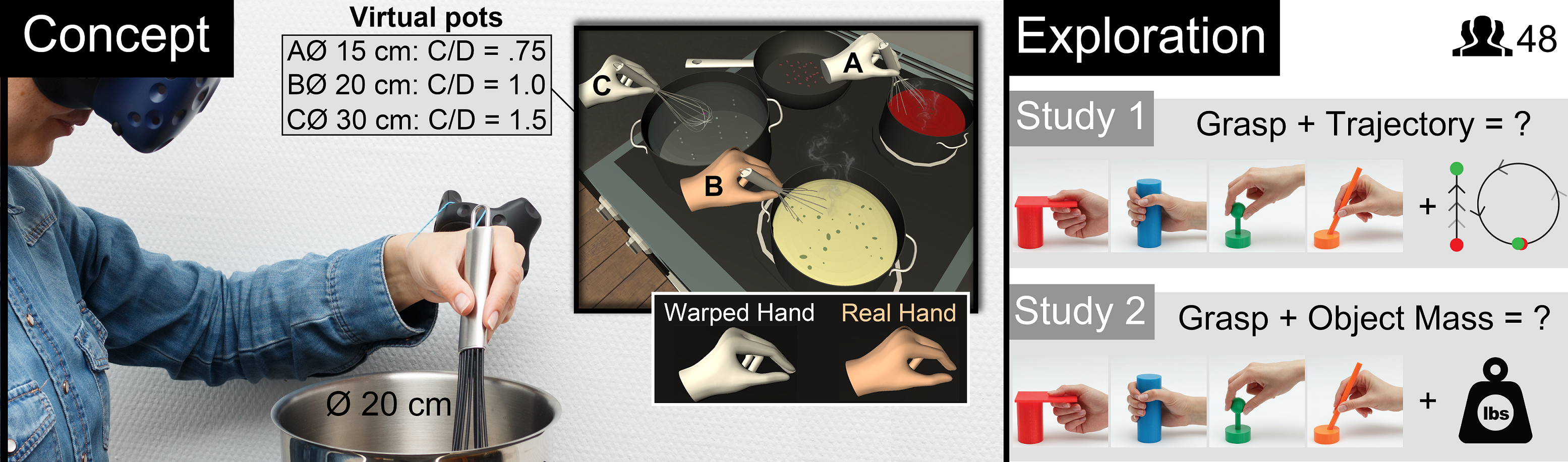

Designing Visuo-Haptic Illusions in Virtual Reality: Exploration of Grasp, Movement Trajectory and Object Mass

Visuo-haptic illusions are a method to expand proxy-based interactions in VR by introducing unnoticeable discrepancies between the virtual and real world. Yet how different design variables affect the illusions with proxies is still unclear.

Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (CHI-22) Full paper

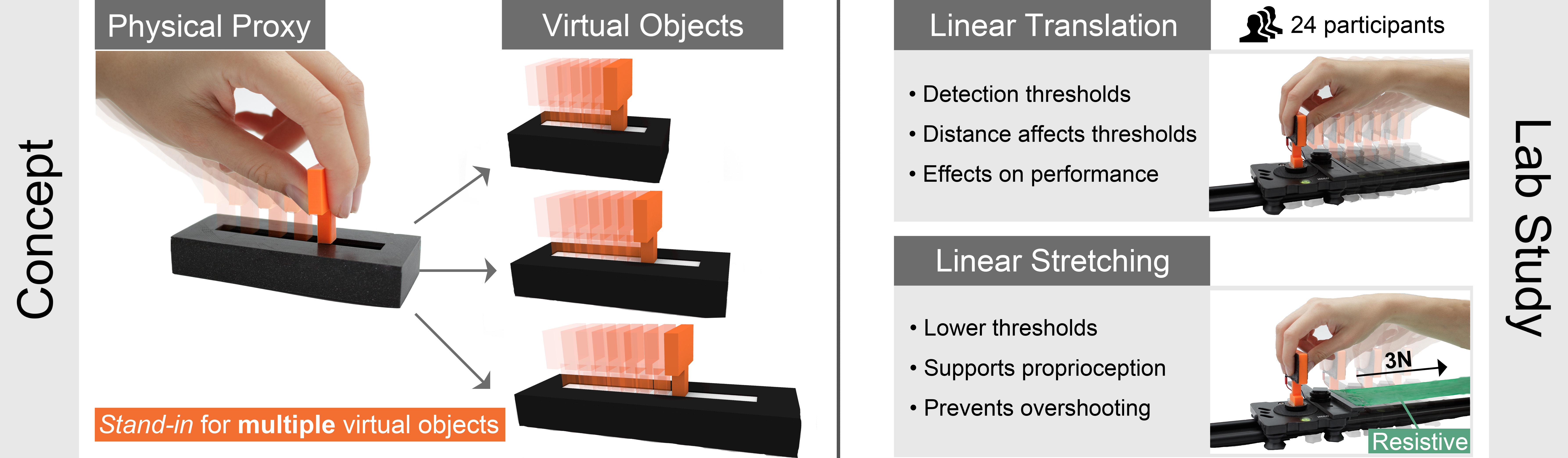

Visuo-haptic Illusions for Linear Translation and Stretching using Physical Proxies in Virtual Reality

Providing haptic feedback when manipulating virtual objects is an essential part of immersive virtual reality experiences; however, it is challenging to replicate all of an object’s properties and characteristics.

Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI-21) Full paper

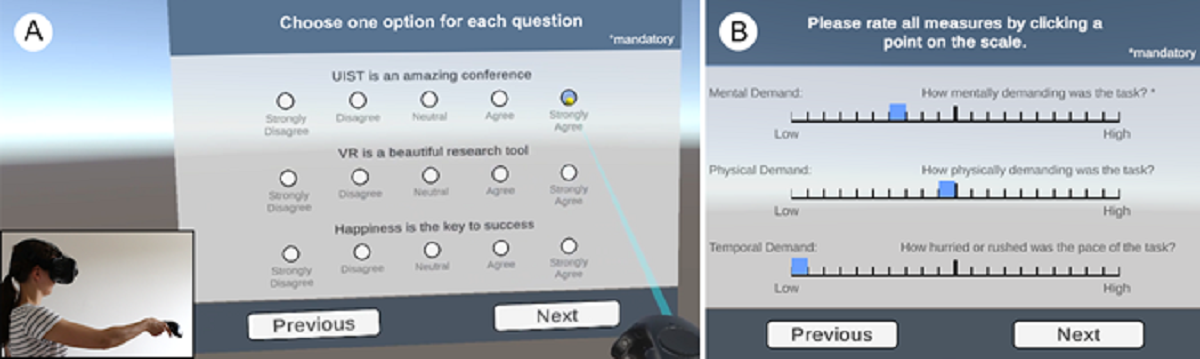

The Virtual Reality Questionnaire Toolkit

In this work, we present the VRQuestionnaireToolkit, which enables the research community to easily collect subjective measures within virtual reality (VR). We contribute a highly customizable and reusable open-source toolkit that can be rapidly integrated into existing VR projects.

Proceedings of the 2021 ACM Symposium on User Interface Software and Technology (UIST-21) Work in Progress

The toolkit comes with a pre-installed set of standard questionnaires, such as the NASA TLX, SSQ, and SUS Presence questionnaires. Our system aims to lower the barrier to using questionnaires in VR and to significantly reduce the development time and cost needed to run pre-, in-between-, and post-study questionnaires.

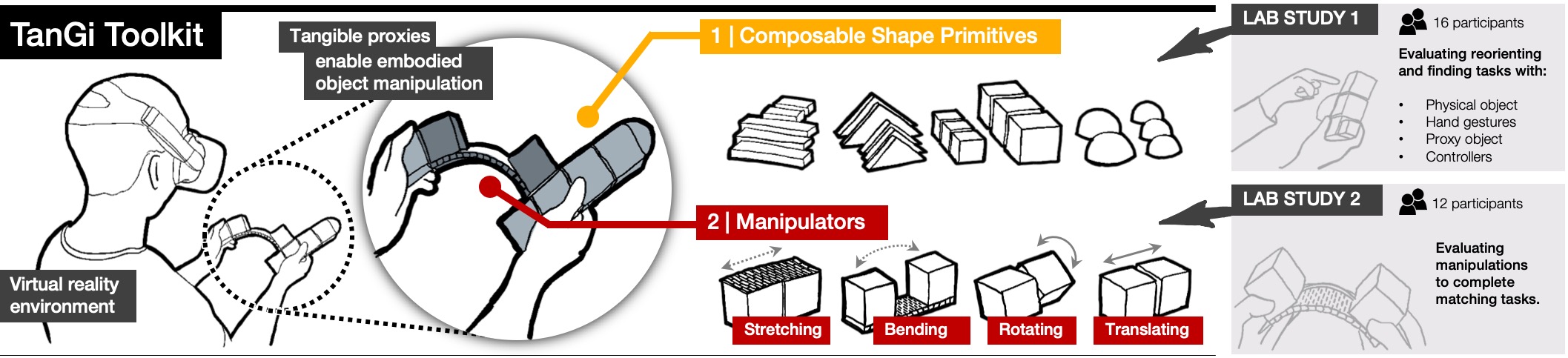

TanGi: Tangible Proxies For Embodied Object Exploration And Manipulation In Virtual Reality

Exploring and manipulating complex virtual objects is challenging due to limitations of conventional controllers and free-hand interaction techniques. We present the TanGi toolkit which enables novices to rapidly build physical proxy objects using Composable Shape Primitives.

Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-20) Full paper

TanGi also provides Manipulators, allowing users to build objects that include movable parts, making them suitable for rich object exploration and manipulation in VR. With a set of different use cases and applications, we show the capabilities of the TanGi toolkit and evaluate its use. In a study with 16 participants, we demonstrate that novices can quickly build physical proxy objects using the Composable Shape Primitives and explore how different levels of object embodiment affect virtual object exploration. In a second study with 12 participants, we evaluate TanGi’s Manipulators and investigate the effectiveness of embodied interaction. Findings from this study show that TanGi’s proxies outperform traditional controllers and were generally favored by participants.

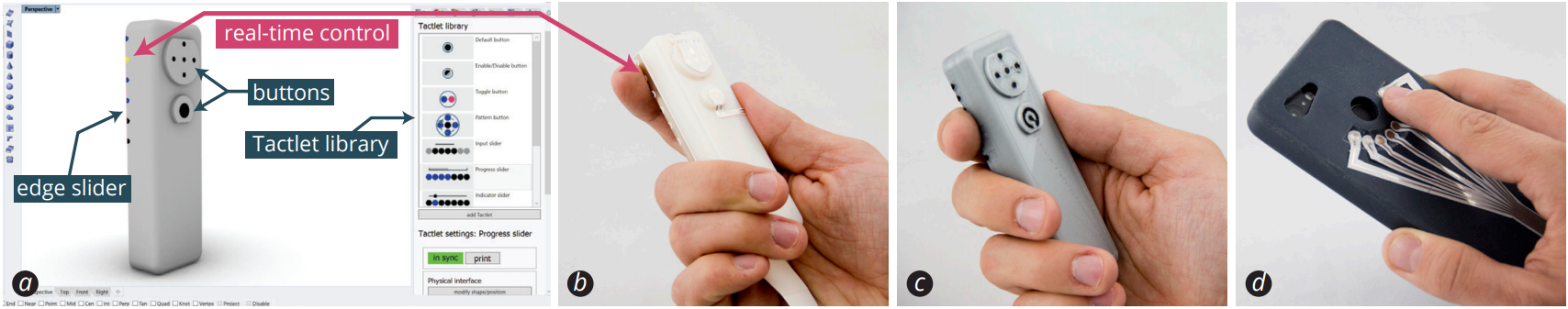

Tactlets: Adding Tactile Feedback to 3D Objects Using Custom Printed Controls

Rapid prototyping of haptic output on 3D objects promises to enable a more widespread use of the tactile channel for ubiquitous, tangible, and wearable computing.

Proceedings of the 2019 ACM Symposium on User Interface Software and Technology (UIST-19) Co-author Full Paper + Demo

Existing prototyping approaches, however, have limited tactile output capabilities, require advanced skills for design and fabrication, or are incompatible with curved object geometries. In this paper, we present a novel digital fabrication approach for printing custom, high-resolution controls for electro-tactile output with integrated touch sensing on interactive objects. It supports curved geometries of everyday objects. We contribute a design tool for modeling, testing, and refining tactile input and output at a high level of abstraction, based on parameterized electro-tactile controls. We further contribute an inventory of 10 parametric Tactlet controls that integrate sensing of user input with real-time electro-tactile feedback. We present two approaches for printing Tactlets on 3D objects, using conductive inkjet printing or FDM 3D printing. Empirical results from a psychophysical study and findings from two practical application cases confirm the functionality and practical feasibility of the Tactlets approach.

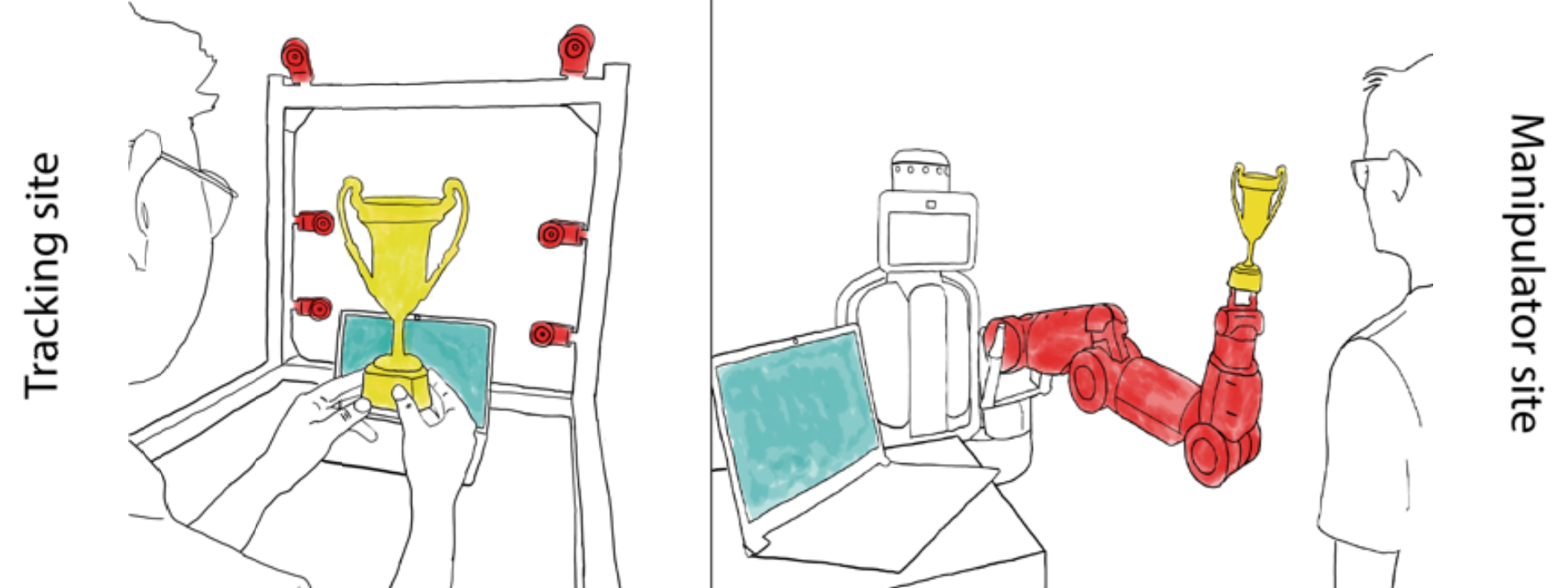

Perspective on and Re-Orientation of Physical Proxies in Object-Focused Remote Collaboration

Remote collaborators working together on a physical object have difficulty building a shared understanding of what each person is talking about. Conventional video chat systems are insufficient for many situations because they present a single view of the object in a flattened image.

Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI-18) Full Paper Honorable Mention Award

To understand how this limited perspective affects collaboration, we designed the Remote Manipulator (ReMa), which can reproduce orientation manipulations on a proxy object at a remote site. We conducted two studies with ReMa, with two main findings. First, a shared perspective is more effective than, and preferred over, the opposing perspective offered by conventional video chat systems. Second, the physical proxy and video chat complement one another in a combined system: people used the physical proxy to understand the object and used video chat to perform gestures and confirm remote actions.